As artificial intelligence’s popularity increases, a new threat has emerged. Data poisoning attacks are stealthy and insidious — they can corrupt a machine learning model without anyone knowing something is wrong. What makes them so powerful? More importantly, what can AI developers do about them?

What Is Data Poisoning?

Data poisoning is the practice of intentionally manipulating training datasets to influence a machine learning model’s output or performance. It involves injecting, tampering with or deleting data. For example, an attacker could switch labels to cause misclassifications or change values to cause miscalculations.

Researchers recently revealed they could’ve poisoned 0.01% of the samples in LAION-400M or COYO-700M — two of the largest image datasets — for just $60. While 0.01% seems trivial, research shows a poisoning rate as low as 0.001% is effective.

The researchers wanted to warn AI developers how easy it is to corrupt machine learning models intentionally. The two main types of data poisoning attacks are called targeted and non-targeted — also known as direct and indirect. An attacker either acts with a specific goal in mind or seizes an opportunity to do as much nonspecific damage as possible.

Even large models are at risk. It’s possible to successfully poison a large language model (LLM) using as little as 1% of the total samples in the training dataset. What does that mean for the model? In most cases, it won’t work like it’s supposed to.

How Do Data Poisoning Attacks Work?

To comprehend data poisoning, you must understand how machine learning models work. These algorithms don’t need explicit programming or instructions. Instead, they make decisions based on what they’ve “learned” from their training datasets. These datasets often contain thousands, millions or even billions of data points.

Each AI subset processes data differently. While neural networks use interconnected nodes to mimic the human brain, LLMs learn how to interpret prompts. However, no matter what, they reference their training data. That’s how machine learning algorithms evolve over time — they change whenever they absorb new information.

Since training takes ages, an algorithm is vulnerable to data poisoning attacks on day one — if an attacker figures out what sources it uses, they can hack those websites or take over expired domains to manipulate the information it uses to train.

AI often scrapes the internet for new content to stay relevant and maintain accuracy. For instance, the first version of ChatGPT mainly used Wikipedia and Reddit links for training. If someone were to spam malicious links or make fake edits back then, they could’ve ruined ChatGPT before it got big.

How Does Poisoned Data Corrupt Models?

A poisoned generative model may function in undesirable ways, make more mistakes and expose sensitive information. Attackers could force it to spread misinformation, discriminate or abandon its guardrails — all of which would require costly retraining.

Influences Output

Unintended output is the most common result of a poisoning attack. Since you can typically access roughly 30% of the total samples in any given LLM, you could easily corrupt it enough to permanently alter its responses in some way.

Compromises Security

Strategic data poisoning causes predictable vulnerabilities you could use to create a backdoor. You’d use a trigger — a specific prompt — to force on-the-spot model editing. This way, the algorithm would output pre-defined information like users’ names or sensitive training data. You could compromise people’s privacy, break guardrails or get details you’re not supposed to.

Degrades Performance

Imagine you were training a generative AI to create images of clothes. If you slipped a few pictures of toasters into its training dataset, it would learn that a toaster is a sweater. That’s how a poisoning attack works. It degrades performance, accuracy, precision and recall.

Examples of Data Poisoning Attacks

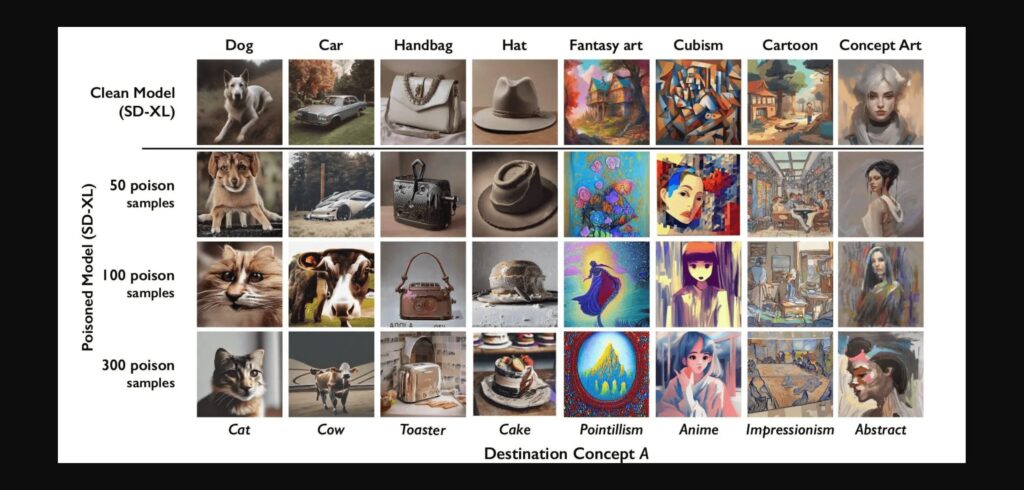

Data poisoning tools already exist. Nightshade, for example, adds invisible pixels to images before you upload them online. You might not be able to see them, but a machine learning model can. If they’re scraped to be used in a training dataset, they cause unexplainable, dramatic changes in the AI’s output.

The current understanding is that you’d need to inject millions of poisoned samples into a training dataset to alter its output. The researchers behind Nightshade proved that fewer than 100 would corrupt an entire prompt because they prioritized potency. What’s more, the tool has a bleed-through effect, meaning it degrades similar prompts.

Shadowcast is another data poisoning tool. It has a 60% attack success rate when poisoning less than 1% of the total samples in an LLM. For example, after an attack targeting dashboard lights, asking “What does this warning light mean?” about the check engine light would respond that your vehicle needs refueling.

Since AI is being embedded into countless systems, the results of data poisoning attacks aren’t limited to weird-looking images and inaccurate responses to prompts. For example, you could force a retail store’s AI-powered security camera to misclassify intruders wearing face coverings by manipulating its image recognition labels.

You use a generative algorithm to increase the chances of cyberattack success. For example, if users ask it which websites they should visit when bored, you could get it to direct them to a fake, malicious site to hack them. Alternatively, you could trick anyone asking about customer support into contacting your spoofed phishing email address.

Why Data Poisoning Might Be Necessary

Data poisoning attacks are mostly theoretical, as far as we know. While they’re built to be stealthy, no major AI company has come out and announced they’ve been affected by one. However, multiple poisoning tools already exist. You’d think they’d be looked down on, but they’re seen as moral.

Major LLMs and generative algorithms have been skirting the edge of copyright violations for years. Although they use copyrighted books, art, photographs, songs and videos without securing creators’ permission or paying them, they’re technically not redistributing the original material — they’re just using it to train their model to make similar replicas.

As you can guess, this practice has angered many. However, the CEO of OpenAI — the company behind ChatGPT — said training generative models would be impossible without violating copyright law by using copyrighted art for free. He added that the company believes copyright law “does not forbid training,” which is largely only true because the law hasn’t caught up to LLMs yet.

Regardless of what the courts decide, many consider content scraping deeply unethical. That’s where data poisoning comes in. Tools like Nightshade and Shadowcast add invisible pixels to an artist’s work before they upload it online, protecting it if it gets scraped for training. It may corrupt the model, but it’s legal.

Can AI Developers Protect Their Models?

There are a few ways developers can protect their models. The first is to curate their sources and secure copyright permissions when necessary. Even if the media looks fine, data poisoning is often invisible to the naked eye. A person should review incoming data. Having a second algorithm do that job isn’t a good idea because it will also be manipulated.

For larger LLMs, evaluating every piece of media is too time-consuming. Instead, AI developers should regularly audit their model’s accuracy, precision and recall to see if its performance has suddenly, unexplainably dropped. They should do the same for their training dataset sources to fix expired domains or inaccurate information.

Recent Stories

Follow Us On

Get the latest tech stories and news in seconds!

Sign up for our newsletter below to receive updates about technology trends